The European Commission has just presented a package to simplify digital regulation, with the declared aim of easing legislation on artificial intelligence, cybersecurity, and data protection and management, a demand shared by most of the European technology sector. In practice, the so-called digital omnibus postpones transparency requirements and sanctions against tech companies that fail to control applications classified as dangerous, which could erode the ambitious ethical vision that inspired the European Artificial Intelligence Regulation of August 2024. The initiative has been interpreted as a symptom of how Europe’s regulatory ambition may be slowed by pressure from big companies and transatlantic geopolitics. It comes, moreover, after Europe had already given in to corporations and lowered its environmental demands.

Brussels insists that the central objective of the legislative package is to radically ease the regulatory burden on citizens and businesses by correcting the duplications, legal gaps and tensions detected during the first phase of implementation of digital legislation in Europe. Citizens are promised fewer consent clicks and more effective control over their data. Companies, for their part, will have a one-stop shop for reporting cybersecurity incidents, as well as a single digital identity that will facilitate, among other things, cross-border procedures and operations. This simplification, according to Brussels, will allow companies to save up to 5 billion euros between now and 2029, resources they will be able to dedicate to innovation. The statement thus acknowledges the legitimacy of the complaint that companies level at EU authorities over excessive bureaucracy in the European Union, which the Draghi report of September 2024 identified as one of the main obstacles to growth and competitiveness.

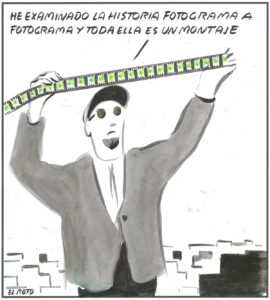

One of the most critical points of the new regulation concerns the development of artificial intelligence models that affect safety, health or fundamental rights. These are tools that underpin essential services such as biometric identification, consumer credit rating, migration management or the security of critical infrastructure. These AI systems, considered high risk, were supposed to be under permanent supervision from August 2026, a deadline that has now been pushed back to December 2027. This delay may be necessary for the development of the sector in Europe, but it generates justified uncertainty about the future of regulations essential for citizens’ autonomy and privacy. In essence, the Commission is committed to improving the performance of the European companies that develop these models: startups and SMEs. There is a risk that the attempt to narrow the competitive gap with the United States will end up benefiting North American companies if the new rules allow them to extend their current advantage.

This new digital framework still needs the green light from the member states and the European Parliament. We welcome the debate on accelerating the development of a technology essential for the future, but it should not call into question the ethical foundations of European regulation. The EU must refine its measures to prevent the drive to compete and innovate from coming at the expense of rights, social guarantees and technological ethics.