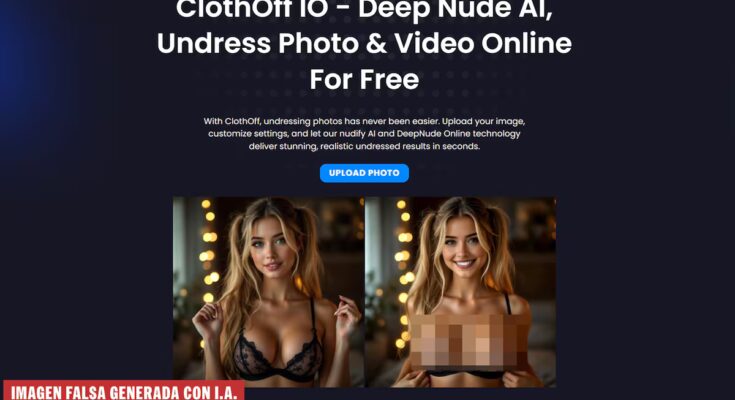

In October 2023, some students at a high school in New Jersey (USA) took a photo from a classmate’s Instagram and ran it through ClothOff, an application that uses artificial intelligence to generate naked bodies. They then showed the result to classmates, and it was shared on Snapchat. The suspects were not criminally charged because they refused to talk or to hand over their devices to the authorities. The young woman, who was 14 at the time, was afraid to walk the school’s hallways again and lives in “perpetual fear” that the fake image could resurface on the internet. Now she has sued ClothOff, the company, reportedly based in Belarus, behind a group of apps that operate under different names and use artificial intelligence to “undress” people.

The lawsuit seeks to “permanently shut down ClothOff’s operations,” says Brina Harden, a fellow at the Center for Media Freedom and Access to Information at Yale University and a member of the plaintiff’s legal team alongside lawyers from the university. “This company exists solely to profit from AI-facilitated sexual exploitation and abuse,” Harden adds.

The complaint explains how the use of these services keeps growing: “Sites like ClothOff only gain reach and popularity,” says Harden, and they keep adding new features, such as videos of the victims or the ability to place their bodies in sexual positions. The complaint cites the app’s own figures: 27 million users in the first months of 2024 and around 200,000 images generated per day. Its business model is based on subscriptions or the purchase of credits.

Spain as a precedent

The lawsuit cites the Spanish case of Almendralejo, which occurred just a month earlier. “When this happened to us, no one even knew what a deepfake was,” says Miriam Al Adib, a gynecologist and the mother of one of the affected teenagers in the Extremaduran town. “Thanks to that media explosion, people here took the matter seriously, because at the beginning not even the police knew what to do with it. Now at least they know it can be reported, because when we went to them, the officer told us that it wasn’t real, that it was artificial intelligence,” she adds. The young people in Almendralejo were charged with child pornography offenses and crimes against moral integrity. In the United States, since May, there has been a law against publishing intimate images without consent, including those generated by artificial intelligence.

“I didn’t know you could also go after the app directly,” says Al Adib. The goal of the American complaint is to eliminate access to these apps on the internet: “This lawsuit aims to permanently shut down ClothOff’s activities. If we win the case, we plan to use every means possible to enforce what the court orders, including asking intermediaries to remove ClothOff from their platforms,” explains Harden.

It won’t be an easy task. There are hundreds, even thousands, of ads and bots that use AI for these purposes. There are thousands of bot messages promoting them, and at the end, after the link, they add a kind of warning: “Do this carefully.” Reddit and Telegram also host posts and links to these kinds of services, many of them linked to ClothOff, which has acquired at least 10 similar services with names like Undress AI Video, UndressHer, PornWorks, DeepNudeNow, Undress Love, DeepNudeAI, AI Porn or Nudify, according to the lawsuit.

Since the media boom of 2023 and the other cases that made the news, these apps have only gotten better, like the rest of the AI industry: better images, more options, the ability to add video. With simple searches, this newspaper found Telegram bots that let you upload a photo for free and turn it into an image of the person in any sexual position. Despite small setbacks (“What AI site do you use to edit clothes out of photos? I used ClothOff, but they already shut it down and I couldn’t find another one like it,” asks an anonymous user on

The risk of child pornography, and of any other harm imaginable, is clear: “Direct access to ClothOff’s technology allows users to create child pornography and non-consensual intimate content, giving them the ability to generate more extreme content and better evade detection systems. This direct access has also given rise to numerous copycat websites and applications that facilitate the creation of the same type of material,” the complaint reads.

Some jurisdictions, such as California and Italy, say they have blocked access to some of these applications within their territories this year. For now, these look like small victories in a long war. “ClothOff was designed to be easy to use and completely anonymous,” says Harden. “All it takes is three clicks to generate nude images, and the site doesn’t require any kind of age verification,” she adds. Google Trends shows a spike in searches for ClothOff in the fall of 2023, but searches for the company’s name or for the more generic term “deep nude” have barely changed since.

A constant drip

Cases keep occurring in Spain and around the world. In July, a 17-year-old from Valencia was arrested for posting on his Instagram profile “undressed” versions of photos of 16 high school classmates and friends, taken from their social media accounts. The young man wanted to show off his computer skills and earn some money. Something similar happened with a 15-year-old boy in Palma, who “undressed” images of five classmates. It also happened in February with a group of students at a high school in Barcelona. Last October, Japanese police arrested a 31-year-old man accused of creating more than 20,000 images of 262 women, most of them famous. He had earned around 7,000 euros by selling access to those fake images. Japan had already seen an arrest in April for selling obscene images of women.

In 2024, an investigation by The Guardian revealed that the creators of ClothOff were two people living in Belarus, Alaiksandr Babichau and Dasha Babicheva. This summer, a former employee of the group of companies behind ClothOff told Der Spiegel that all of its employees come from countries of the former Soviet Union. He also described marketing campaigns in several countries, worth around 150,000 euros, on Telegram channels and on forums such as Reddit and 4chan. According to company documents seen by Der Spiegel, the app’s target audience is “men aged 16 to 35” with interests in video games, internet memes, far-right ideas, misogyny and Andrew Tate, a misogynist influencer who has been detained in Romania and faces rape charges there.

In a display of cynicism, one of the FAQs on ClothOff’s website asks whether “it is safe to use ClothOff.” The answer only considers the privacy and security of users, not the victims who have to see their bodies treated as commodities: “Your photos are processed securely, without saving or sharing them, making it one of the safest platforms for ‘undressing’ photos online. Our online deep nude tool processes your files in the cloud, without storing or sharing them. All content is automatically deleted after it is generated.”